Introduction

Brain tumors are among the most aggressive and lethal forms of cancer, and early detection is crucial for improving patient outcomes. However, analyzing medical images to diagnose and classify brain tumors presents several challenges due to the sheer size and complexity of whole-slide images (WSIs). In this article, I will share insights from my project, Converting Large Medical Images to Embeddings for Training Classifier Models, where I leveraged deep learning techniques to process high-resolution medical images efficiently. This is not just a technical breakdown but a real-world experience of tackling the problem, highlighting key lessons and takeaways.

The Challenge of Large-Scale Medical Image Analysis

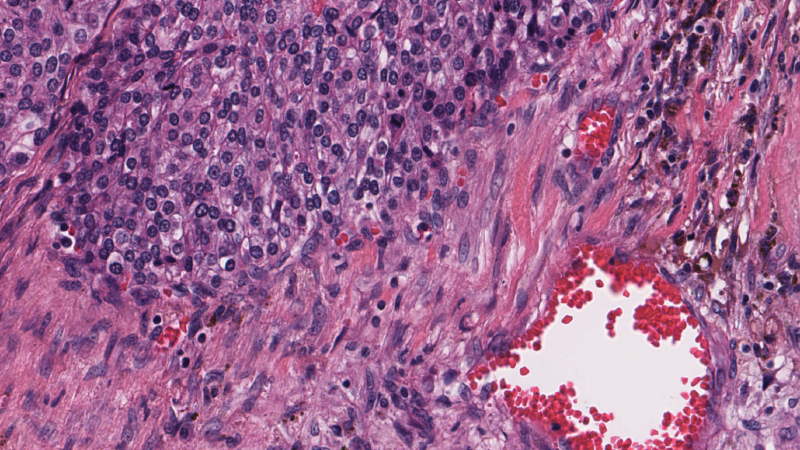

Medical images, particularly WSIs, are massive, often exceeding 100,000 pixels in resolution. Traditional image classification methods struggle to process such data due to memory constraints and computational complexity. My goal was to convert these high-dimensional images into meaningful embeddings that could be used for training classifier models to predict immune invasion stages in glioblastoma—a highly aggressive brain tumor.

Key Challenges

- Data Size & Complexity: WSIs are gigapixel images that require efficient handling and storage.

- Annotation Scarcity: Unlike natural images, medical images require expert annotations, which are often limited.

- Feature Extraction: Extracting meaningful representations from these images without losing critical information.

- Computational Constraints: Training deep learning models on such large images is resource-intensive.

The Solution: Transforming Images into Embeddings

To address these challenges, I explored and adapted two state-of-the-art deep learning approaches:

Deep Attention Multiple-Instance Survival Learning (DeepAttnMISL)

DeepAttnMISL is a multiple-instance learning (MIL) approach designed for survival prediction from WSIs. Instead of classifying entire images at once, it breaks them into smaller regions (instances) and learns representations using an attention-based mechanism. Key steps included:

- Patch Extraction & Clustering: Extracting patches from WSIs and grouping them into phenotype clusters.

- Feature Extraction via CNNs: Using a pre-trained VGG model to generate feature embeddings for each patch.

- Attention-Based Pooling: Aggregating patch-level information using an attention-based MIL pooling layer to make patient-level predictions.

- Final Classification: Using the learned embeddings to train a classifier model to predict immune invasion stages (A, B, C, D).

Vision Transformers (ViTs)

Inspired by their success in NLP, I also explored Vision Transformers (ViTs), which process images as sequences of patches rather than relying on convolutions. ViTs leverage self-attention mechanisms to capture long-range dependencies, making them particularly suited for analyzing complex medical images.

- Patch Tokenization: Splitting the large image into smaller fixed-size patches.

- Embedding Generation: Encoding each patch into a vector representation.

- Self-Attention Mechanism: Learning relationships between different patches to detect patterns indicative of tumor presence.

- Classifier Training: Using the learned representations to train a predictive model.

Key Takeaways

The Importance of Representation Learning

Converting images into embeddings significantly reduced the computational burden while preserving essential features. Choosing the right architecture for embedding extraction was crucial—DeepAttnMISL provided structured phenotype-based representations, while ViTs captured global dependencies.

Attention Mechanisms Enhance Interpretability

Using attention-based pooling allowed us to identify the most critical regions of the WSIs, improving both accuracy and interpretability. This was particularly useful for medical experts who need to understand model predictions.

Pretrained Models Save Time & Resources

Instead of training deep networks from scratch, leveraging pretrained models (e.g., VGG, ResNet) for feature extraction proved highly effective. Fine-tuning these models with domain-specific data further improved performance.

Computational Constraints Are a Real Challenge

Processing high-resolution WSIs required significant memory and GPU resources. Using techniques like patch extraction, dimensionality reduction, and efficient batching helped mitigate these challenges.

Conclusion

Analyzing medical images for brain tumor detection is a complex but highly impactful challenge. By leveraging DeepAttnMISL and Vision Transformers, we can efficiently extract meaningful embeddings that improve classification accuracy while reducing computational costs. This project highlighted the power of attention mechanisms in deep learning and underscored the importance of adapting models to the unique constraints of medical imaging.

For those interested in deep learning applications in healthcare, this field offers vast opportunities to push the boundaries of AI-driven diagnostics. Whether you’re a researcher, practitioner, or enthusiast, the key takeaway is clear—smart representation learning is the future of medical image analysis.

What are your thoughts on AI in medical imaging? Have you worked on similar projects? Let’s discuss in the comments!